Notes from Observability Roundtables: Capabilities Deep-dive

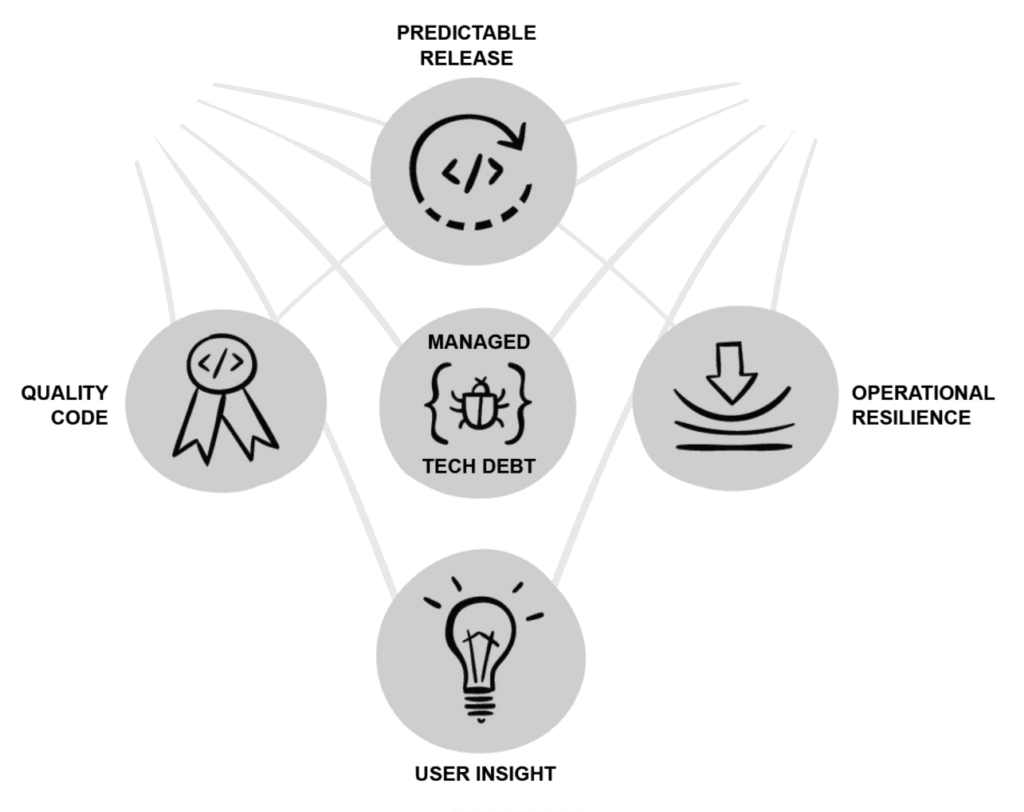

By Peter Tuhtan | Last modified on October 1, 2019Greetings, fellow o11ynaut! You may recall a post we shared here about two months ago that told tales of the themes we felt best represented our recent release of the Framework for an Observability Maturity Model. Well, the o11y maturity model was once again the primary topic and focus of Honeycomb’s most recent Observability Roundtable event held in San Francisco in mid August.

An audience comprised of Honeycomb team members (including myself!), users and non-users of Honeycomb, and the fine folks at Blameless came together to discuss each of the five key capabilities an organization looks to achieve excellence in when adopting the maturity model.

Our attendees split into five teams to dive into the weeds on each subject; individuals shared their interpretation of the capability, how it is practiced (or how they’d like it to be someday) in their org or on their own, and any and all thoughts or feelings towards the topic. Here are some of the key soundbites and dialogue that each of the five teams captured, both from the notes and/or my perspective:

1 Quality Code

or

What supports this capability?

- Religiously use test environments, staging, and canaries.

- Always aim to have a correlation ID and way to follow a request.

- Write debug info to a ring buffer (ring buffer logging) and attach them to your event “tail-based sampling”.

- Scope debug messages with feature flags so you can write out everything for *N* users.

2 Predictable Release

What is the connection between predictable releases and o11y?

3 Operational Resilience

What is resilience?

- the ability of an organization to respond and prevent issues, given the control of production environment

- enabling people to quickly fix issues without breaking much

- the ability to change mean time between failure and mean time to recovery

- the extent to which things are going wrong and the system remains fine

- adaptability (capacity) of complex system to unknown-unknowns

- how a system continues to provide resistance to failure while maintaining adequate functionality

- being prepared for failure

- the distributed dependency on people (the "hit by a bus" factor)

Why we want a resilient product

- in distributed systems, it's too much for operations to be experts on

- perhaps it's time that developers become the first tier (application failures) while operations become the next tier (state failures)

- expertise needs to be distributed — one model is to shrink operations and instead have the combination of infrastructure + consultant + domain expertise

- specialists have the responsibility to share their knowledge

Post-mortems

- goal: remediate, document, respond, and create predictability

- should facilitate knowledge spread

- hard to track, i.e. write once read never

- efficiency trade off on analysis vs action item

4 User Insight

- Always start an insight gathering process within your own team, by identifying and agreeing on what problem we’re trying to solve or value we’re trying to provide.

- Define what your user insights should be used for..

- Feature specific? Product overall? Product vision? Immediate roadmap?

- Ideally have your users already separated into personas that fit your product’s persona patterns (eg; power users, core users, delinquent users, indirect users, etc.)

- If magically possible, instrument all the things.

- Prioritize instrumentation, namely for the user events, that you want to measure against your success criteria/objectives and purpose that the feature(s) should be serving.

- Very important to account for as much quantitative insight as possible. Understand that by agreeing on as much qualitative insight as possible (the things the data won’t be able to tell us).

- Identify user insights that can feasibly reduce or prevent technical debt ASAP (eg; reinforcement/vindication in cutting scope that would otherwise be included and eventually be unused/misused).

- Triage all insights on a frequent cadence and act upon them according to priorities.

5 Managed Tech Debt

Taxonomy of tech debt:

- Velocity of shipping

- Outages

- Alert fatigue

- Latency

- Legacy/Abandoned code

- Cloud compute costs

- Specific people being repeatedly interrupted, downstream effects, excessive toil or dependencies

How do you communicate the effects of not fixing debt?

- Need to clearly examine/communicate consequences for tech debt

- Make the pain concrete

Sometimes, technical debt can be a good thing :)

- It's important to allow for it to exist as a young organization — can’t let everything slow you down.

- Clear division between “this is debilitating to the product’s use” and “this isn’t going to stop the world from turning.”

- As growth in an organization hopefully occurs, it is time to pay close attention to time spent on new releases vs tech debt. Pay close attention to when things begin to move slower, GA dates begin to consistently slip.

How to avoid compounding known and unknown or forgotten tech debt?

- Document everything into one source of truth.

- How can we measure the impact of tech debt?

- Slowdown in development

- Incidents

- How to measure engineering time?

- Look for repeated failures and try to measure them

- Document poor processes to get time to change them

- Measure things like incidents

How we interact with tech debt

- Documenting the impact in a recordable format

- Allocating time to fix based on business data

- Analyzing risk and effort for tech debt

Until our next discussion

Related Posts

Introducing Relational Fields

Expanded fields allow you to more easily find interesting traces and learn about the spans within them, saving time for debugging and enabling more curiosity...

Real User Monitoring With a Splash of OpenTelemetry

You're probably familiar with the concept of real user monitoring (RUM) and how it's used to monitor websites or mobile applications. If not, here's the...

Transforming to an Engineering Culture of Curiosity With a Modern Observability 2.0 Solution

Relying on their traditional observability 1.0 tool, Pax8 faced hurdles in fostering a culture of ownership and curiosity due to user-based pricing limitations and an...