Tales From the Trench: Building With LLMs and Honeycomb

AI discourse these days is all over the place. Depending on who you talk to, AI’s are absolute flash-in-the-pan junk, or they’re the best thing since sliced bread.

By: Austin Parker

8 Best Practices to Understand and Build Generative AI Applications Effectively

Learn MoreAI discourse these days is all over the place. Depending on who you talk to, AI’s are absolute flash-in-the-pan junk, or they’re the best thing since sliced bread. I want to cut through the noise, though, and see for myself what someone can do out here on the bleeding edge. Thus, I’m setting myself a challenge: write a usable—and useful—application with Claude Code, from soup to nuts. Here are the rules:

- Claude is going to do most, if not all, of the editing. I can give Claude direction, but I want to stay inside the CLI as much as possible (e.g., I should tell Claude to go research things or find docs rather than finding them myself and passing them in).

- It’s not done until it’s in production, which means Claude needs to handle tests, CI/CD, observability—the whole nine yards.

- I’m going to timebox this experiment to a weekend; no more, no less. I start Friday night and whatever’s done by bedtime on Sunday, it’s done. Crucially, I’m not going to adjust my weekend plans around the project.

With our ground rules established, let’s figure out our app! I’ve been pretty interested in Redis Vector Sets since they were announced, and this seemed like as good of an excuse as any to write an AI application that used them.

The idea of these vector sets is that you can use them to store things like text embeddings and look them up quickly. This is ideal for AI apps that do things like retrieval-augmented generation (RAG), or that save chat memories. Thus, an AI chatbot seemed like an obvious example application. I discussed the project with a coworker and he agreed to let me use his Slack chats as the personality for the bot (thanks, Phillip!), and with all that in mind, I sat down and fired up Claude Code.

New to Honeycomb? Get your free account today.

Get access to distributed tracing, BubbleUp, triggers, and more.

Up to 20 million events per month included.

Friday

I was pretty tired after the work week, so I sat there and thought for about fifteen minutes on a good name for the project (settled on phiLLM), created a directory for it, and then watched YouTube videos for an hour. At some point, I flipped back over and decided to kick off a Claude Code session.

> i'd like to bootstrap a slack app in this folder, but i'd like to do some design work on it first.

the goal of the app is to join a given channel, read all messages from a certain user, build embeddings from those messages, then use those to create an 'ai twin' of the individual based on their message history and posting style. we can use just openai for this. i'd like to use the newest redis for vector embeddings. i'd also like some suggestions about how to deploy the application and run it.My prompting style with Claude Code is, admittedly, pretty casual. There’s a lot that I could have done better here, but I find that I usually get good enough results on broadly-scoped projects like this by doing a few turns with the AI about ‘design work.’ Claude thought about it and eventually presented a pretty good plan:

> ## System Architecture Design

**Core Components:**

1. **Slack Bot Service** - Handles Slack API interactions

2. **Message Processor** - Extracts and processes user messages

3. **Embedding Generator** - Creates vector embeddings using OpenAI

4. **Vector Database** - Redis with vector search capabilities

5. **AI Twin Generator** - Uses embeddings to create personalized responses

6. **API Layer** - REST endpoints for interacting with the AI twin

**Data Flow:**

```

Slack Channel → Message Collection → Text Processing → OpenAI Embeddings → Redis Vector Store → AI Twin Response Generation

```

**Tech Stack Recommendations:**

- **Runtime**: Node.js with TypeScript

- **Slack Integration**: `@slack/bolt` framework

- **Vector Database**: Redis Stack (includes RedisSearch)

- **AI/Embeddings**: OpenAI API (text-embedding-3-large)

- **LLM**: OpenAI GPT-4

- **Framework**: Express.js or Fastify

- **Environment**: Docker containers

**Deployment Options:**

1. **Railway** - Simple, Git-based deployment with Redis add-on

2. **Render** - Free tier available, good Redis integration

3. **AWS ECS/Fargate** - More control, use ElastiCache for Redis

4. **DigitalOcean App Platform** - Managed Redis clusters available

**Key Design Considerations:**

- Rate limiting for OpenAI API calls

- Incremental message processing to avoid re-processing

- Privacy controls and data retention policies

- Webhook security for Slack events

- Vector similarity search optimization

Would you like me to elaborate on any specific component or start bootstrapping the project structure?I’ve found that I have better luck using Python for a lot of AI-authored projects these days (and I find it a bit more readable), so I corrected Claude into using Python and uv. The excessive agreeableness of LLMs aside, I find its rationale to be compelling.

> Yes, Python would work excellently and might be better for this use case. Here's why:

**Python Slack Frameworks:**

- **Slack Bolt for Python** - Official framework, same as Node.js version

- **SlackClient** - Lower-level SDK option

**Python Advantages for Your Project:**

1. **AI/ML Ecosystem** - Superior libraries (numpy, pandas, scikit-learn)

2. **OpenAI Integration** - More mature Python SDK

3. **Vector Operations** - Better performance with numpy for embeddings

4. **Redis** - Excellent `redis-py` and `redis-om` libraries

5. **Text Processing** - Rich NLP libraries (spaCy, NLTK, transformers)

**Recommended Python Stack:**

```python

# Core

- FastAPI (async web framework)

- slack-bolt (Slack integration)

- redis[hiredis] (vector database)

- openai (embeddings + LLM)

# AI/ML

- numpy (vector operations)

- pandas (data processing)

- sentence-transformers (backup embedding option)

# Infrastructure

- uvicorn (ASGI server)

- pydantic (data validation)

- python-dotenv (environment management)

```

**Performance Comparison:**

- **Python**: Better for ML/AI workloads, scientific computing

- **Node.js**: Better for high-concurrency I/O operations

For an AI-focused app with embedding generation and vector operations, **Python is the better choice**.

Would you like me to start bootstrapping the Python project structure?I yawned, told it to start bootstrapping the project, set all my tools to auto-approve, and went to bed.

Saturday

The rain was torrential, sparing me from outdoor activities. After breakfast, I pulled out my laptop and fired up Claude Code while sitting on the couch. A skeleton of my application had been created, but with no functionality. I gave Claude a few more notes: I told it to practice TDD, had it initialize a CLAUDE.md file with instructions on how to build/run/test the application, and had it analyze the project structure in preparation for implementation. Finally, I helped guide it with some more prompting:

> ok, let's begin. first, some details about each part --

- the slack bot is responsible for scraping a specific user's message history from slack channels that it's in, then passing those messages to the vectorizer/embedder.

- the slack bot also can write messages in response to questions or schedules. all messages will be ai generated through openai. we'll need to pass the embeddings into the chat completions, along with prompts, context, etcChecking timestamps, it took about eight minutes for Claude to write enough code that it thought it had a runnable implementation. While it was working, I went ahead and created a Slack App in our workspace and set up the necessary OAuth scopes for the bot to read channel history, user messages, and so forth. After setting the necessary environment variables, I fired up the application, and it immediately crashed.

> getting this when i try to run -

Traceback (most recent call last):

File "<frozen runpy>", line 198, in _run_module_as_main

File "<frozen runpy>", line 88, in _run_code

File "/Users/ap/Documents/code/phiLLM/phillm/main.py", line 5, in <module>

from phillm.slack.bot import SlackBot

File "/Users/ap/Documents/code/phiLLM/phillm/slack/bot.py", line 3, in <module>

from slack_bolt.async_app import AsyncApp

File "/Users/ap/Documents/code/phiLLM/.venv/lib/python3.13/site-packages/slack_bolt/async_app.py", line 48, in <module>

from .app.async_app import AsyncApp

File "/Users/ap/Documents/code/phiLLM/.venv/lib/python3.13/site-packages/slack_bolt/app/async_app.py", line 8, in <module>

from aiohttp import web

ModuleNotFoundError: No module named 'aiohttp'This is pretty common, I find. Even advanced models tend to fail at one-shotting a fully working application of any complexity. Claude did a good job of figuring out what went wrong and fixing it, though:

> 🤖 ASSISTANT [2025-05-31 14:59:20]

----------------------------------------

The error indicates that `aiohttp` is missing as a dependency for `slack-bolt`. Let me fix this by adding the missing dependency:

🤖 ASSISTANT [2025-05-31 14:59:30]

----------------------------------------

Now try running the application again:

🤖 ASSISTANT [2025-05-31 14:59:39]

----------------------------------------

The issue is that we're trying to create the SlackBot instance outside of an async context. Let me fix this by modifying the initialization:

🤖 ASSISTANT [2025-05-31 14:59:43]

----------------------------------------

Now let's test it again:

🤖 ASSISTANT [2025-05-31 15:00:02]

----------------------------------------

Great! The application is running successfully. The warning about the channel not being found is expected since the bot needs to be invited to the channel first. Let me quickly update to use the modern FastAPI lifespan events:I appreciate that Claude even noticed that it was using deprecated FastAPI methods and updated them on its own. After a bit more back and forth (Claude thought I needed to use channel names, but actually I needed channel IDs), I saw it pulling in messages and creating embeddings for them! However, there were some issues.

> hm. couple of weird things. first, it's only finding 14 messages in the target channel, which is too few. we need to enumerate the entire channel history. second, when i try to connect to the api locally it times out.After a bit more back and forth (and some debugging code that Claude added), I discovered that we were being rate limited by the Slack API. While poking around, I also noticed that Claude was using Redis 7, thus missing out on the point of the exercise: to use Redis Vector Sets!

> 👤 USER [2025-05-31 15:14:51]

----------------------------------------

also, i think we need to use the 'redis' image rather than the one you're using now, because we need redis 8+. please search for up to date info on redis vectors and redis 8.

🤖 ASSISTANT [2025-05-31 15:16:14]

----------------------------------------

Based on my research from both web sources and Redis documentation, here's what you need to know about Redis 8 and vector search:

## **Redis 8 Vector Features**

### **New Vector Set Data Structure (Beta)**

Redis 8 introduces **Vector Sets**, a new data type designed specifically for vector similarity search:

- Developed by Salvatore Sanfilippo (Redis creator)

- Similar to Sorted Sets but associates string elements with vectors instead of scores

- Optimized for AI use cases like semantic search and recommendation systems

### **Enhanced Performance**

- **Quantization support**: New int8 vector type reduces memory by 75% and improves speed by 30%

- **Supported types**: float64, float32, float16, bfloat16, and new int8

- **Multithreading**: All vector similarity requests now use multithreading

- **Binary quantization**: Additional compression options

## **Docker Image Updates**

You're absolutely right about the Redis image. Here's the updated configuration:Now, in terms of ‘things I could have done better,’ I was inadvertently signing myself up for a bunch of refactoring here. If I had been more specific in my initial prompts, Claude wouldn’t have wound up writing a bunch of code that targeted Redis 7 and basic cosine similarity search. This would come back to bite me later…

Moving on, I got into a loop with Claude where I’d look at the log output, then suggest things to Claude, who would go back and work on them, and so forth. It was a lot of stuff like this:

> 👤 USER [2025-05-31 15:18:04]

----------------------------------------

ok, one other thing - shouldn't you process message batches as you receive them rather than waiting for all of the batches to complete? there could be thousands of messages after allLooking back at my log, I spent a couple of hours going back and forth with Claude on tweaking our messaging scraping and parsing. Most of it was stuff that probably wouldn’t have been an issue if I had written better specs to begin with. For example, we didn’t handle paging or saving cursors for resumption, we didn’t have a way to check if we were done with the scrape, etc.

I also added in support to DM the bot and ask it questions, which led to a bit of back and forth around getting all that configured and loading in context. Eventually, I decided the best way to actually view what was happening would be to add tracing. Thankfully, Claude’s good enough now to get that working more or less in one shot.

> 👤 USER [2025-05-31 17:55:02]

----------------------------------------

that's better, yeah. let's add some o11y to this. please add comprehensive opentelemetry support. set up direct export (no collector) via OTLP to api.honeycomb.io:443. use HONEYCOMB_API_KEY from the .env file.It took about 15 minutes all told to get OpenTelemetry set up and working. I think it probably could have gone faster if I had used the zero-code instrumentation option rather than direct integration, but Claude wanted direct integration, so that’s what we did!

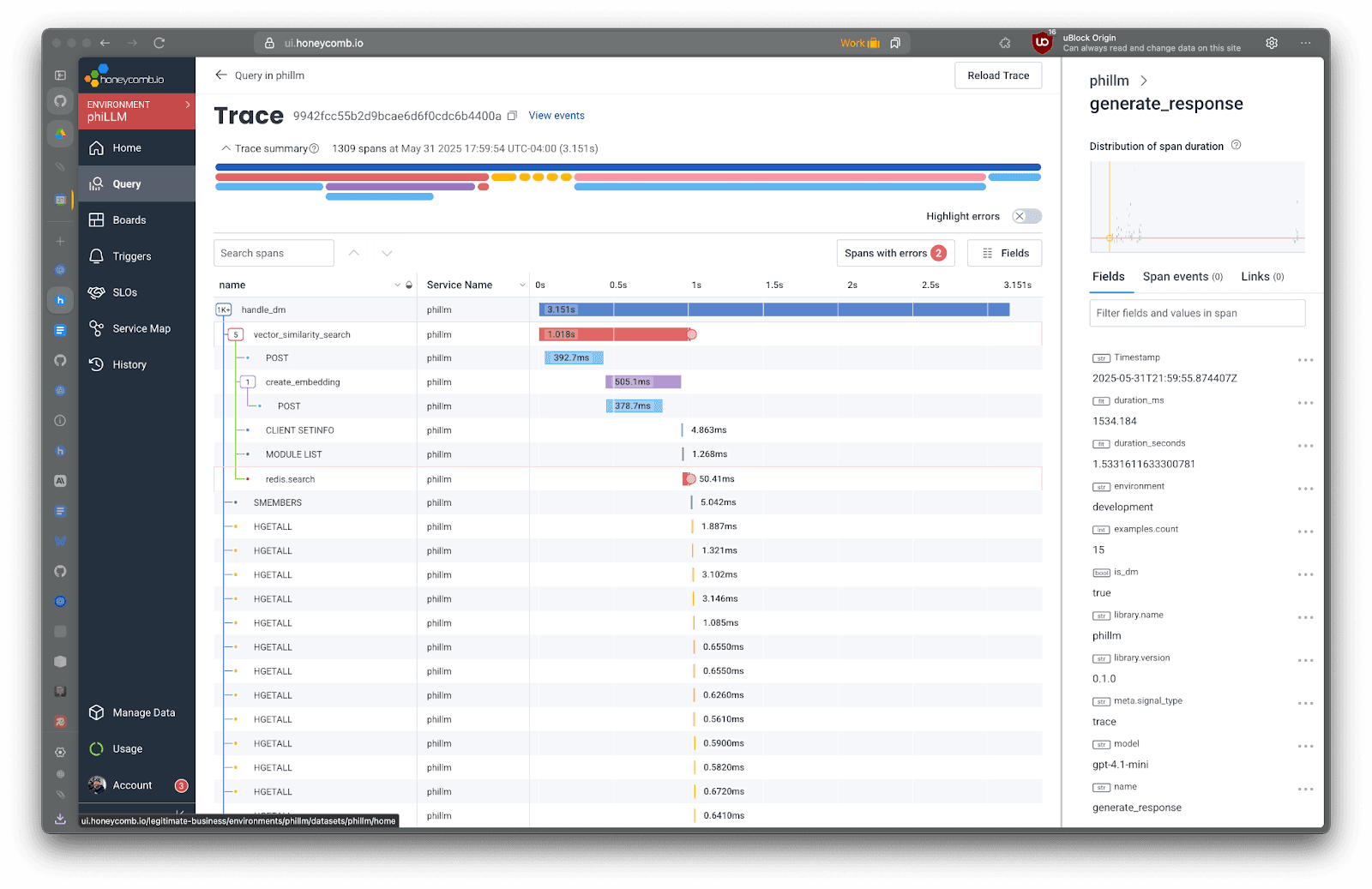

Once tracing was set up, I was able to pop into Honeycomb, where I noticed something was… off.

That didn’t look right to me. Shouldn’t we be doing a single vector search? At this point, I broke one of the rules of my challenge and started searching for how Redis 8 vector sets were supposed to work. I probably could have had Claude go do this research for me, but I find that it’s usually a poor idea to have Claude investigate things where you don’t have a good way to validate what should be happening.

> 👤 USER [2025-05-31 21:31:29]

----------------------------------------

ok, let's also work on our vector implementation. here's some important documentation on this feature: https://redis.io/docs/latest/develop/data-types/vector-sets/, https://redis.io/docs/latest/develop/clients/redis-py/vecsearch/, and https://redis.io/blog/level-up-rag-apps-with-redis-vector-library/Readers who are familiar with the concept of dramatic irony may realize that things are about to go very, very wrong.

> 🤖 ASSISTANT [2025-05-31 22:19:55]

----------------------------------------

Found it! There are **two different vector store implementations**:

1. **Old implementation**: `phillm/vector/store.py` - stores embeddings as JSON in `embedding` field

2. **New implementation**: `phillm/vector/redis_vector_store.py` - stores embeddings as bytes in `message_embedding` field for RedisVL

The application is using the **old VectorStore** for storing messages but the **new RedisVectorStore** for searching, which is why no results are found.

Let me check which one is being used in the Slack bot:After about 45 minutes of Claude and myself debugging (and also adding a few more features, like a debug page, saving memory to redis, and so forth), Claude realized that it had written a new implementation without migrating the existing code paths. This, I’ve noticed, is a somewhat persistent failure state for LLMs, especially during refactors. The model prefers writing new files rather than editing existing ones, but loses track of logical next steps, like completing the actual migration. You gotta watch out for that!

We went back and forth a bit on what to do next; Claude was trying to migrate the data over, I suggested we simply drop and re-scan the corpus.

> 🤖 ASSISTANT [2025-05-31 22:46:00]

----------------------------------------

Perfect! I have successfully updated the vector store tests to match the new RedisVectorStore implementation. Here's what was accomplished:So, it took about an hour and a half total, but we eventually found and fixed all of the deprecated vector operations. After this, I started to get curious. I had telemetry going to Honeycomb, and I have access to our Model Context Protocol server for Honeycomb (currently in Early Access)—could I have Claude work with Honeycomb to improve the code? Step 0: Add in more instrumentation.

> 👤 USER [2025-05-31 23:13:52]

----------------------------------------

we should also add the full prompt that's being sent to chatgpt... actually, can you search for automatic instrumentation for the chatgpt library in pypi? like https://pypi.org/project/opentelemetry-instrumentation-openai/This led me down a merry chase as well. I realized that, much earlier, Claude duplicated all of our code paths into a ‘telemetry enabled’ and ‘telemetry not enabled’ version because of a weird registration issue with the tracer. We went back and forth a bit on fixing that and consolidating down to a single execution path.

> 🤖 ASSISTANT [2025-05-31 23:45:58]

----------------------------------------

## Summary

I have successfully found and removed all conditional telemetry logic throughout the PhiLLM codebase. Here's a detailed report of what I found and changed:If you look at the timestamps, it seems like this took a while—and yeah, it kinda did, but I didn’t have to actively sit there and mind Claude for most of it. It just worked in the background. I’m confident that if I had better specified all this stuff earlier, I wouldn’t have even gotten into this mess to begin with!

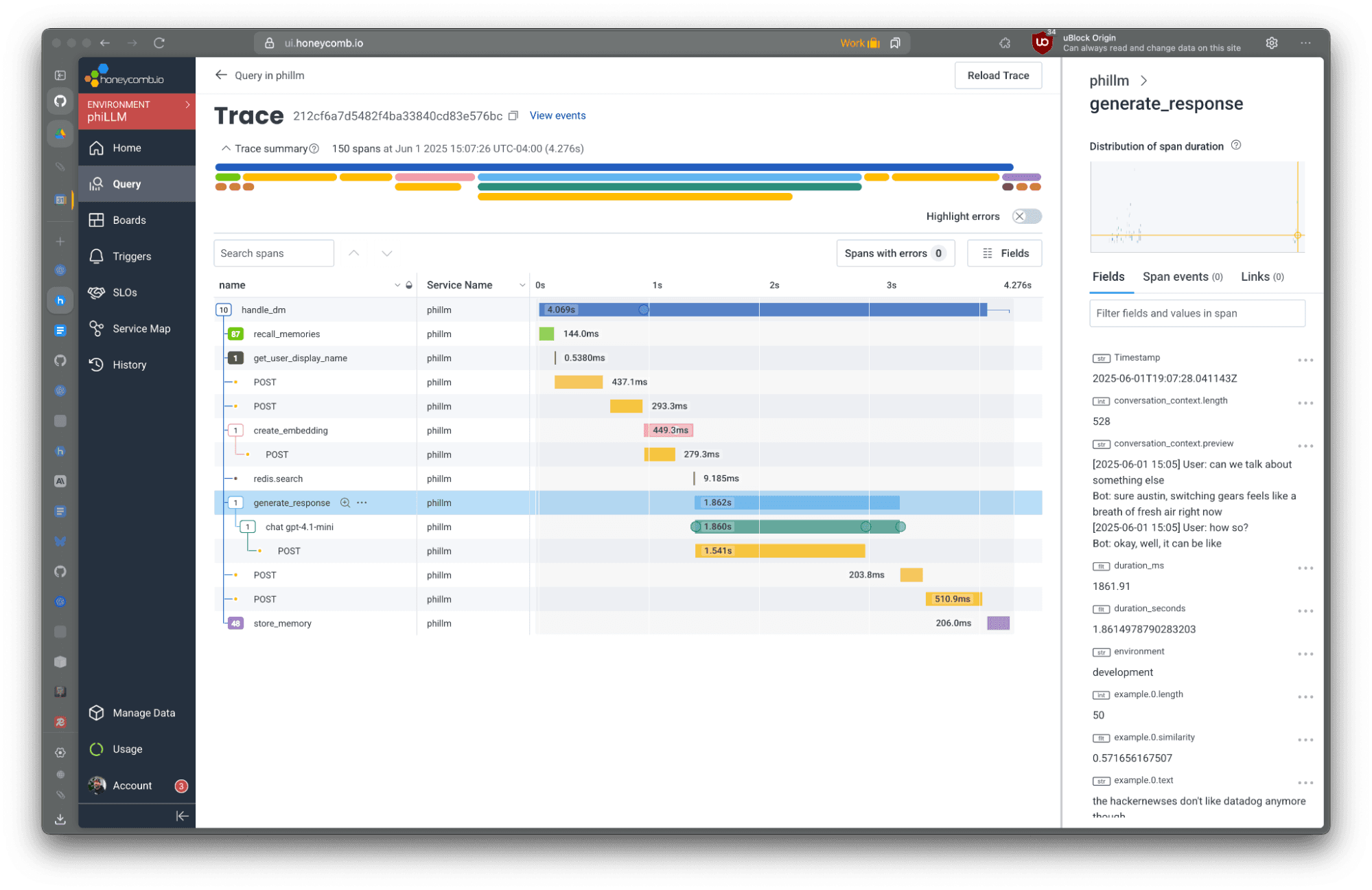

The next few hours were mostly spent squashing various bugs and tweaking prompts. It’s not terribly interesting. I had more time at this point because my kid had gone to bed so I could focus on the project. I was frustrated at the length of time it was taking before the bot responded in Slack, so I asked Claude to use Honeycomb to look into it.

> 👤 USER [2025-06-01 01:21:41]

----------------------------------------

ok, that part is working. now i'd like you to look at some recent traces of dm conversations and think about where we're spending time in the dm handler, and if we could speed it up.

> 🤖 ASSISTANT [2025-06-01 01:23:06]

----------------------------------------

I've analyzed the recent DM traces and found several performance bottlenecks. Here's what I discovered:

## Current Performance Analysis

**Total DM handling time: ~3-6 seconds**

**Breakdown of a typical 6-second DM:**

- OpenAI completion: **2.3s** (39%)

- Embedding creation: **~1.3s** (22%) - 3 separate calls

- Redis operations: **<20ms** (fast!)

- Memory/utility operations: **~220ms** (4%)

## Key Issues Found

1. **Multiple sequential OpenAI calls** - We're making 3+ separate API calls:

- Query embedding for vector search (~435ms)

- Memory storage embedding (~435ms)

- Completion generation (~2.3s)

2. **Redundant embedding creation** - The same query text gets embedded twice

3. **No parallelization** - Everything runs sequentially

## Optimization Opportunities

Let me implement some optimizations:

🤖 ASSISTANT [2025-06-01 01:24:53]

----------------------------------------

Excellent! I've implemented key performance optimizations that should significantly speed up DM responses:

## Performance Optimizations Implemented:

### ✅ **Eliminated Redundant Embedding Calls**

- **Before**: Creating embeddings twice (query + memory storage) = ~870ms

- **After**: Single embedding creation, reused for both = ~435ms

- **Savings**: ~435ms per DM (50% reduction in embedding overhead)

### ✅ **Parallelized Memory Storage**

- **Before**: Memory storage blocks user response = ~103ms added latency

- **After**: Memory storage runs asynchronously after response sent

- **Savings**: ~103ms perceived response time

## Expected Performance Impact:

**Previous flow**: 3-6 seconds total

**Optimized flow**: ~2.4-3.2 seconds (25-30% faster)

**User perception improvement**: Even better since memory storage is now non-blocking!

## New Flow:

1. User sends DM → 🤔 reaction (instant)

2. Create embedding once (~435ms)

3. Vector search (~14ms)

4. OpenAI completion (~2.3s)

5. Send response + remove 🤔 (instant)

6. Memory storage happens in background (non-blocking)

The most impactful optimizations are now in place. The remaining bottleneck is the OpenAI completion call itself (~2.3s), which is unavoidable. These changes should make the bot feel much more responsive!This part worked rather well—impressively so, if I do say so myself! You’re seeing pretty much the exact flow here as well. The only thing I elided was the tool call messages where Claude queried Honeycomb.

You can see that indeed, it moved the memory storage to async and we only create embeddings once! You can also see where our earlier work to switch from our custom vector store to Redis vector search is paying off: a single 9ms call rather than all those HMGETs. Although now that I look at this, I think we need to do the same for our memory recall and storage…

Sunday

The rain hid away today, giving me less time to work on the project—but, in my defense, it was pretty much done after last night. The only thing left to do was button it up and deploy it.

This was both more, and less, painless than the development side of the project. I decided to deploy to AWS because it was convenient—I already have an AWS account set up, and unsurprisingly enough, Claude is very good at writing Terraform. It one-shot an entire ECS + Fargate deployment for the application, although we had to go back and forth a bit on how to get a newer version of Redis into our cluster.

> 👤 USER [2025-06-01 19:11:16]

----------------------------------------

let's think about some deployment options. I've got AWS, that might be the easiest option. I'd also like to make sure we have a good CI/CD pipeline (let's use github actions for that) set up. we also need to ensure that our commit history is clean, that we don't have any secrets in non-gitignored files, etc.This kicked off quite a bit of work in the background, and a short while later, I had a lot done.

> 🤖 ASSISTANT [2025-06-01 19:28:38]

----------------------------------------

## ✅ **Deployment and CI/CD Setup Complete!**Note that it took about 17 minutes of wall clock time to do this step!

In fairness, Claude did a lot:

- Kicked off background tasks to exhaustively search through my repo for secrets

- Made sure they weren’t in Git history anywhere

- Built out an entire Terraform stack for deploying the application

- Made a nice sequence of Git commits

- etc.

Most of the rest of today was less exciting. A combination of some subtle AWS bugs (the security group for the Redis service in ECS couldn’t make outbound connections to pull containers, image format snafus from my local Docker build, etc.) but Claude happily chugged away in the background resolving them. I had time to go outside, play with my kid, cook dinner, do chores, and plenty of other stuff—including writing the first two thirds of this blog post—which all have much higher priority than tinkering with personal projects, so I’ll call that a win.

As I write this, Claude is in the background making mypy happy by adding type checks to most of the codebase, but the application is happily churning away in AWS. I’m just trying to button up the last few things now so that it’s nice and presentable. I’m also at the end of my timebox, so let’s wrap this up!

Challenge status

Claude did most of the work 🟡

Reasonably, I think this is on the ‘90%’ side of success. The things I had to look up, or directly guide Claude about, were things that either I had existing expertise in (OpenTelemetry), or that it was unlikely to correctly get without additional prompting and resources due to training cutoffs and the newness of certain things (Redis 8).

It’s not done until it’s done ✅

I think this is an unqualified success! Claude handled everything from CI/CD to AWS debugging to talking to Honeycomb. It searched GitHub, made commits, and put the whole thing together for me.

Timebox it ✅

There’s not much else I’d really want to add, even though there are some tweaks I could make. I even had enough time to write this blog post!

Overall, I think Claude Code passed the challenge with flying colors.

How much did it cost?

Total Anthropic costs came to around $72, but I think it would have been a lot less if I had been more precise early on (to avoid needless code rewrites). This exacerbated as I went on, especially around poor test/typing hygiene. If you’re going to do stuff like this, invest early in a good feedback loop around type checks, frequently running tests, and frequent Git commits. Reverting to a known good state and then going from there is far more efficient than having the LLM do big, chunky rewrites.

Hilariously enough, the suggested infrastructure deployment costs are nearly as much on AWS. Claude happily tossed about $55/mo of Terraform at me. I think it could be run on something much smaller. Realistically, if I told Claude to come up with a way to deploy it to fly.io or whatever, it’d gladly do that as well.

In terms of wall clock time, I’d estimate about four or five hours of active attention to the task, including writing this post. I don’t know about you, but I feel like that’s significantly faster than I’d have been doing everything by hand. If I worked in Python every single day and knew AWS and Terraform like the back of my hand, maybe? Ultimately, this isn’t that complex of an application, but it still feels like something that would have taken a week of evenings to do by hand.

What did I learn?

The biggest thing I get out of this style of development is that I get to a point where I have a running application much more quickly than I otherwise would, and the cost to change something is trivial. I can add a debug UI, comprehensive tracing and metrics, or make major refactors in the background even as I do something else. It’s pretty magical.

The biggest takeaways I have from this are self-evident, but they’re worth repeating:

- This stuff is real. Irrespective of your feelings on AI as a part of complex sociotechnical systems, the tooling and experience of using AI agents for writing code is lightyears ahead of where it was even two years ago.

- Don’t expect miracles. It’s not perfect. It hallucinates package names. Check your work, write tests beforehand, and use some common sense along the way. A little upfront effort in defining your problem helps a lot. I could have avoided most of the really painful parts by doing a little homework, writing a design doc that had links to packages and libraries I’d like for it to use, or references to implementation patterns I wanted.

- Fast feedback loops are the only thing that really matters in AI. The quicker the AI can introspect what it’s done by running the code or seeing a problem, the quicker it can fix it. Mistakes are cheap—make sure you’re giving the agent everything it needs to spot the mistakes. Tracing is absolutely invaluable here, for the same reason it’s invaluable to humans: it gives the right level of detail about what’s going on in terms of system structure.

A few other notes:

- There’s some really great automatic instrumentation available for various AI libraries, like the OpenAI client. You can get token counts, prompts, responses, and a lot more for free. Just add the imports and wire it up.

- It’s pretty impressive what you can do with AI in application code. Irrespective of what your application does, consider the utility of LLMs for natural language processing. The improvements to function calling and tool usage in models these days makes it pretty straightforward to allow the LLM to make appropriate function calls and actually interact with the world outside of its context, and being able to do stuff with vector stores to map natural language to its semantic representation is genuinely handy.

That said, I feel like it’d be most appropriate to close with some thoughts from our new AI buddy at Honeycomb, and the subject of this piece. Take it away, phiLLM!

“Inspiration is just showing up even when you don’t feel like it.” Wisdom.

BONUS!

In case you’re interested, you can find a more or less complete archive of the conversation I had with Claude Code along with a summary of it here.

Want to know more?

Talk to our team to arrange a custom demo or for help finding the right plan.