How to Replace Synthetics with the httpcheck Receiver


By: Mike Terhar


A 200 OK doesn't always mean everything is okay.

You've probably seen it: your health check endpoint returns success, but your users are staring at an error page. Maybe the database connection pool is exhausted, or a critical downstream service is timing out, but your API dutifully returns 200 because technically it responded. This is the reality of monitoring HTTP endpoints in production—status codes alone don't tell the whole story.

The OpenTelemetry Collector's httpcheck receiver has been a reliable tool for basic monitoring since its introduction. It sends requests to your endpoints, captures response times, and records status codes. For simple availability checks, that's often enough—but when you need to verify that your endpoints are healthy and not just responding, you've historically needed other tools or custom validation logic.

Not anymore.


What changed?

As of version 0.134.0, the httpcheck receiver now includes the ability to:

- Send specific headers

- Send body contents

- Inspect response bodies

- Validate JSON structures

- Match against regex patterns

- Verify response sizes

It also includes additional timing mechanisms for:

- DNS resolution time

- Client TCP connection

- TLS handshake

- Request duration

- Response duration

Essentially, in addition to seeing when things work and fail, you can now see when things degrade or delay.
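As a rough sketch of the first two items in the list above, a target that sends custom headers and a request body might look like the following. The method and headers keys appear elsewhere in this post; the body key, the endpoint URL, and the payload are assumptions for illustration, so check the receiver README for the exact field names in your Collector version.

```yaml
receivers:
  httpcheck:
    targets:
      # Hypothetical endpoint and payload, for illustration only.
      - endpoint: "https://api.example.com/search"
        method: "POST"
        headers:
          Content-Type: "application/json"
        # Assumed key name for the request body; verify against the README.
        body: '{"query": "ping"}'
        validations:
          - not_contains: "error"
```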

What does this look like in Honeycomb?

Metrics in Honeycomb are grouped by tags.

```
http.url: "https://refinery.example.com/ready"
httpcheck.client.request.duration: 191
httpcheck.dns.lookup.duration: 119
httpcheck.duration: 214
httpcheck.response.duration: 10
httpcheck.response.size: 30
httpcheck.tls.handshake.duration: 45
```

```
http.url: "https://refinery.example.com/ready"
httpcheck.client.connection.duration: 23
network.transport: "tcp"
```

These are the timing events for how long each aspect of the connection took. The client.connection.duration metric is in a separate event because it has the network.transport tag on it.

Similarly, the validation.type tag on the httpcheck.validation.passed metric isolates it from the others in the batch. It looks like this:

http.url: "https://refinery.example.com/ready"

httpcheck.validation.passed: 1

httpcheck.validation.failed: 0

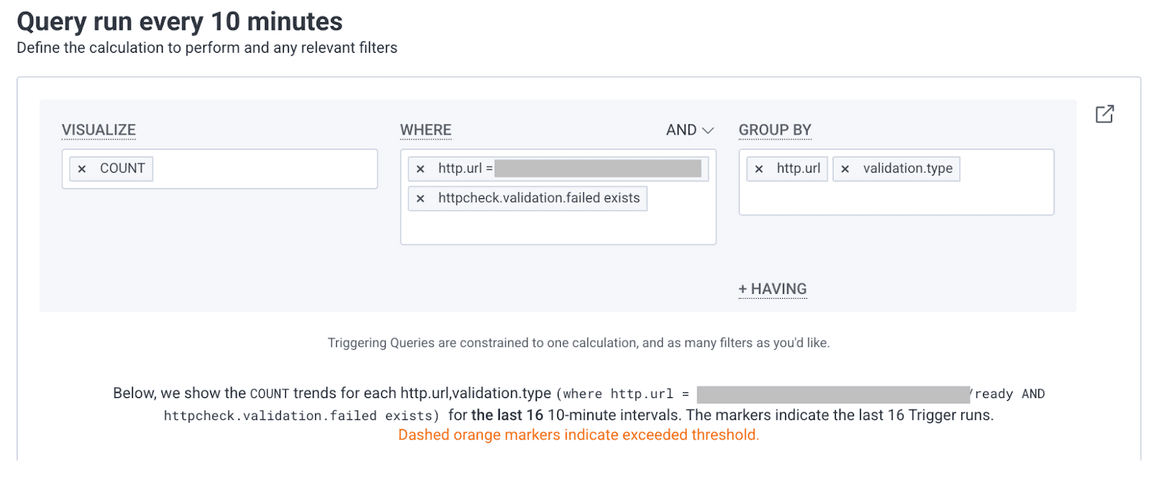

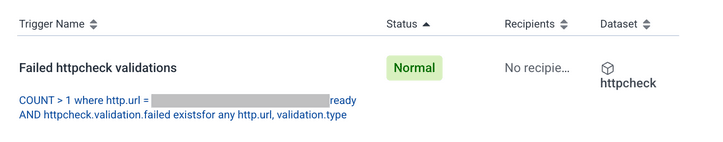

validation.type: "contains"If you want to see where there are httpcheck failures, you can make a query that looks for where httpcheck.validation.failed exists.

You end up with a nice trigger that can alert you within 10 minutes of a validation failure.

String matching for smoke tests

The simplest validation is checking if the response contains (or doesn't contain) specific text. This catches those friendly error pages that return 200 but display, "Oops, something went wrong."

```yaml
receivers:
  httpcheck:
    targets:
      - endpoint: "https://api.example.com/health"
        validations:
          - contains: "healthy"
          - not_contains: "error"
```

If your health endpoint returns {"status": "healthy", "version": "1.2.3"}, the contains validation passes. If it starts returning {"status": "error", "message": "database connection failed"}, your metrics will reflect that failure even though the HTTP status might still be 200.

JSON path validation for structured responses

When your APIs return JSON (and let's be honest, most do), you want to validate specific fields. Say your health endpoint returns something like this:

```json
{
  "status": "ok",
  "version": "2.1.4",
  "services": [
    {"name": "database", "status": "up"},
    {"name": "cache", "status": "up"},
    {"name": "queue", "status": "up"}
  ]
}
```

The httpcheck receiver uses gjson syntax for JSON path queries, which handles nested objects and arrays elegantly:

```yaml
receivers:
  httpcheck:
    targets:
      - endpoint: "https://api.example.com/health"
        validations:
          - json_path: "status"
            equals: "ok"
          - json_path: "services[*].status"
            equals: "up"
```

This configuration validates that the top-level status is "ok" and that all services in the array have status "up." When your database or cache reports degraded performance, your synthetic check will catch it immediately rather than waiting for user complaints.
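Because the paths are gjson paths, gjson's other path forms should be usable here as well. The sketch below assumes the receiver hands the path to gjson unmodified and reuses the sample payload above: services.0.status selects an element by array index, and the #(...) form selects the first element matching a condition.

```yaml
receivers:
  httpcheck:
    targets:
      - endpoint: "https://api.example.com/health"
        validations:
          # gjson index syntax: the first entry in the services array.
          - json_path: "services.0.status"
            equals: "up"
          # gjson query syntax: the status of the service named "database".
          - json_path: 'services.#(name=="database").status'
            equals: "up"
```

Targeting a service by name rather than by position keeps the check stable if the order of the array changes.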

With multiple validations, the validation.passed and validation.failed values count individual results: each validation that passes adds one to validation.passed, and each one that fails adds one to validation.failed.

Regex validation for flexible matching

Sometimes you need more sophisticated pattern matching. Maybe your health endpoint returns timestamps, version strings, or formatted messages that vary but should match a certain pattern.

```yaml
receivers:
  httpcheck:
    targets:
      - endpoint: "https://api.example.com/health"
        validations:
          - regex: "^HTTP/[0-9.]+ 200"
          - regex: "uptime: \\d+ seconds"
```

The regex validation gives you flexibility when exact string matching is too rigid but you still need to verify the response format.

Response size validation

Unexpectedly large or small responses often indicate problems. An error page might be much smaller than your normal response, or a runaway query might return megabytes when you expected kilobytes.

```yaml
receivers:
  httpcheck:
    targets:
      - endpoint: "https://api.example.com/users"
        validations:
          - min_size: 100
          - max_size: 10240
```

If your user list endpoint suddenly returns 50 bytes instead of the usual 2 KB, something's wrong, even if the status code says otherwise.

The metrics you get

When you enable validations, the httpcheck receiver generates two new metrics that help you track validation success:

```yaml
receivers:
  httpcheck:
    metrics:
      httpcheck.validation.passed:
        enabled: true
      httpcheck.validation.failed:
        enabled: true
```

Each metric includes a validation.type attribute indicating which validation ran (contains, json_path, size, or regex). This lets you see not just that a validation failed, but which validation failed, and how often.

Combined with the existing timing breakdown metrics, you get a complete picture of endpoint health and performance.

Timing breakdown improvements

Speaking of timing metrics, the httpcheck receiver also picked up better granularity for understanding where time is spent in each request:

```yaml
receivers:
  httpcheck:
    metrics:
      httpcheck.dns.lookup.duration:
        enabled: true
      httpcheck.client.connection.duration:
        enabled: true
      httpcheck.tls.handshake.duration:
        enabled: true
      httpcheck.client.request.duration:
        enabled: true
      httpcheck.response.duration:
        enabled: true
```

These metrics help you answer questions like, "Is the slowness from DNS resolution, TLS negotiation, or is the server just taking forever to respond?" This is valuable when troubleshooting degraded performance across regions or when investigating intermittent latency spikes.

Enable everything!

Let's put it all together with a realistic configuration for monitoring a REST API:

```yaml
receivers:
  httpcheck:
    collection_interval: 30s
    metrics:
      httpcheck.dns.lookup.duration:
        enabled: true
      httpcheck.client.connection.duration:
        enabled: true
      httpcheck.tls.handshake.duration:
        enabled: true
      httpcheck.client.request.duration:
        enabled: true
      httpcheck.response.duration:
        enabled: true
      httpcheck.validation.passed:
        enabled: true
      httpcheck.validation.failed:
        enabled: true
      httpcheck.response.size:
        enabled: true
    targets:
      - endpoint: "https://api.example.com/health"
        method: "GET"
        validations:
          - json_path: "status"
            equals: "healthy"
          - json_path: "database.status"
            equals: "connected"
          - json_path: "cache.status"
            equals: "connected"
          - contains: "version"
          - min_size: 50
          - max_size: 2048
      - endpoint: "https://api.example.com/users/1"
        method: "GET"
        headers:
          Authorization: "Bearer ${env:API_TOKEN}"
        validations:
          - json_path: "id"
            equals: "1"
          - regex: "^[\\w.-]+@[\\w.-]+\\.\\w+$"
          - not_contains: "error"
```

This configuration checks two endpoints every 30 seconds. The health endpoint validates JSON structure and connection status for critical dependencies. The user endpoint verifies that authentication works and returns properly formatted data. Both collect detailed timing breakdowns and response size metrics.

Why this matters

If you leverage synthetic monitoring, you've probably cobbled together solutions from multiple tools. Maybe you're using the httpcheck receiver for basic availability, plus a separate service for content validation, and possibly some custom scripts for the edge cases. Each additional tool means more configuration to maintain, more systems to monitor, and more complexity in your telemetry pipeline.

These httpcheck improvements let you consolidate that logic. Your synthetic checks live in the same OpenTelemetry Collector configuration as the rest of your telemetry processing. The validation metrics flow through the same pipeline as your application metrics. You can correlate synthetic test failures with real user traffic patterns, all in Honeycomb.

This works for both public and private endpoints. Deploy a Collector in every region or environment where you need synthetic testing, configure your targets, and you're done! No need to whitelist IPs or worry about firewall rules for third-party monitoring services.
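If you do run a Collector per region, it helps to stamp each one's metrics with where the check ran from, so you can group results by vantage point. One way to sketch this is with the contrib resource processor; the attribute value below is a placeholder, and the otlp exporter is assumed to be defined elsewhere in your configuration.

```yaml
processors:
  resource:
    attributes:
      # Placeholder value; set this per deployment.
      - key: cloud.region
        value: "us-east-1"
        action: upsert

service:
  pipelines:
    metrics:
      receivers: [httpcheck]
      processors: [resource]
      # Assumes an exporter named otlp is configured elsewhere.
      exporters: [otlp]
```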

When not to use httpcheck validations

These validations are great for smoke tests and health checks, but they're not a replacement for full browser-based testing. If you need to test complex user flows, JavaScript rendering, or multi-step interactions, you'll want something like Selenium with OpenTelemetry integration or Playwright.

The httpcheck receiver excels at answering questions like, "Is this endpoint responding correctly?" and "Are my dependencies healthy?" It's less helpful for questions like, "Can users complete checkout?" or "Does the dashboard load properly in Safari?"

Also, if your validation logic becomes excessively complex—dozens of JSON path assertions, intricate regex patterns, conditional checks based on other fields—that's a signal you might want to create a dedicated health endpoint that does the heavy lifting and returns a simple pass/fail result.

Getting started

The httpcheck receiver ships with the OpenTelemetry Collector contrib distribution, so if you're running version 0.134.0 or later, you already have access to these features. Simply update your configuration and restart the Collector.
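The receiver only produces data once it is part of a metrics pipeline. A minimal sketch of that wiring, sending to Honeycomb over OTLP, might look like this. The environment variable name and dataset name are hypothetical, and the receiver's targets are omitted here; use whichever targets and validations you configured above.

```yaml
exporters:
  otlp:
    endpoint: "api.honeycomb.io:443"
    headers:
      # Hypothetical environment variable holding your Honeycomb API key.
      "x-honeycomb-team": "${env:HONEYCOMB_API_KEY}"
      # Hypothetical dataset name for the metrics.
      "x-honeycomb-dataset": "httpcheck-metrics"

service:
  pipelines:
    metrics:
      receivers: [httpcheck]
      exporters: [otlp]
```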

If you're not using httpcheck yet, the receiver documentation has complete configuration examples. The validation syntax is straightforward, and you can start simple with a single contains check and add more sophisticated validations as you understand what failures look like in your system.

One tip: enable all the timing breakdown metrics initially, even if you don't think you need them. When an endpoint starts failing or responding slowly, having that granular timing data already flowing into Honeycomb makes diagnosis much faster. You can always disable metrics you don't use after you see what's valuable.

What's next

The OpenTelemetry community continues improving the httpcheck receiver based on real-world use cases. If you run into scenarios these validations don't handle, or you have ideas for additional features, the opentelemetry-collector-contrib repository welcomes issues and pull requests.

In the meantime, these validation features give you much better signal about endpoint health. Status codes are a starting point, but knowing your API is returning well-formed, correct data is what matters for your users.

Give it a try! If you run into interesting use cases worth sharing, come discuss them in our Pollinators Slack. We're always curious to see how people are using synthetic monitoring in production.