If it Wanted to, it Would: The Bitter Lesson for LLM Users

Systems, like people, have things they "want" to do. Each model has patterns of reasoning and synthesis it performs naturally. Power users learn to align with these representational grooves to get the "best" results and the highest-signal reasoning per token.

By: Hannah Henderson

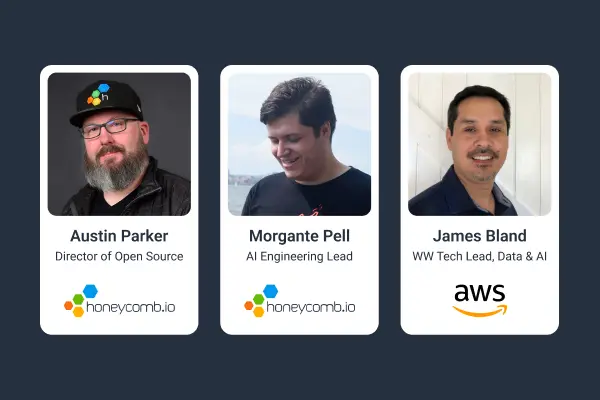

Introducing Honeycomb MCP: Your AI Agent’s New Superpower

Watch Now

There’s a viral saying folks use about flaky crushes, spouses, and forgetful friends: "if he wanted to, he would." The idea is straightforward: when someone cares, they make the effort. As it turns out, the same principle applies surprisingly well to AI.

Systems, like people, have things they "want" to do. Each model has patterns of reasoning and synthesis it performs naturally. Power users learn to align with these representational grooves to get the "best" results and the highest-signal reasoning per token.

And models are evolving with staggering speed. I recently heard Boris Cherny, creator of Claude Code, tell Stanford's Modern Software Developer class that good builders design for the model six months from now—and that, to get the most from LLMs, we should work with what the model will soon want.

Leverage AI-powered observability with Honeycomb Intelligence

Learn more about Honeycomb MCP, Canvas, and Anomaly Detection.

The original bitter lesson

In 2019, reinforcement-learning pioneer Rich Sutton summarized seventy years of AI research in a single bitter lesson: Every generation of researchers tries to build knowledge into their systems, and every generation watches those hand-crafted frameworks result in performance plateaus.

Real progress comes from search and learning: algorithms that explore vast spaces and improve from experience. Together, they define the scalable path to intelligence: systems that find and refine, not ones that know.

That’s why deep learning and scaling laws beat rule engines and expert systems: they grow with compute. As Huang’s Law continues to play out, and hardware, algorithms, and software rapidly co-evolve, so do exploration and adaptation.

That’s the real bitter lesson: while human insight can give a head start, general algorithms and compute win the race.

The new bitter lesson: for users

The same lesson now plays out on the prompt line. Each of us faces the researcher's choice: encode our own cleverness or let the model explore. Constrain a model too tightly (i.e., “say exactly this, in exactly this tone”) and you collapse its prediction space. Fewer possibilities, less synthesis, more mediocrity.

There’s a time and place for control: when accuracy, safety, or immediate professionalism matter, constraints beat exploration. But outside of those situations, you’ll get more out of a model by prompting for idea creation and analysis. Use it to co-explore.

When you overtighten prompts, you:

- Shrink the model's reasoning space, reducing generative depth

- Amplify your framing and bias

- Block cross-domain synthesis

LLMs excel at latent pattern transfer (recombining abstractions across domains). Over-constraining them blocks this strength. The result is narrowed output. Your instructions define how the model can think, limiting how it might want to think.

Sidebar: when machines teach themselves

The pattern repeats across domains. Anthropic’s Constitutional AI project showed that models improve when guided by principles rather than micromanaged instructions. A recent Nature paper, Discovering state-of-the-art reinforcement learning algorithms, found that reinforcement-learning agents can now discover algorithms that outperform those manually designed by humans.

The lesson is the same: whether improving alignment or autonomy, computation outscales human cleverness.

Achieving maximum leverage: harmonize with the model

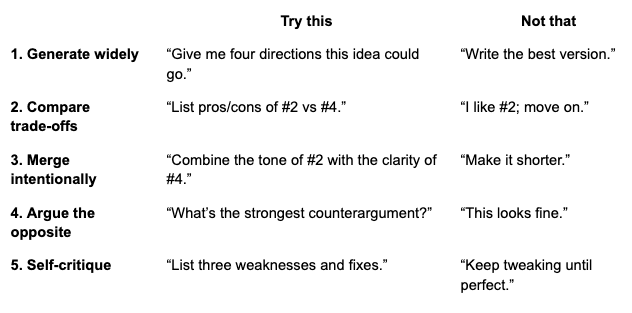

To work with the model rather than against it, use this simple five-step loop: generate widely → compare tradeoffs → merge intentionally → argue the opposite → self-critique.

This loop mirrors how learning systems operate: broad exploration followed by compression and refinement.

The six-month mindset: Sutton’s Law for builders

Cherny’s advice, to design for the model six months from now, extends Sutton’s lesson from research to product strategy. Sutton observed that systems that scale with compute always outperform handcrafted ones, especially as computing power continues to grow. Cherny encouraged builders to stay ahead by optimizing not for today’s constraints but for those of the near future.

The best teams prototype ahead of capability, trusting that inference will get faster (and cheaper!) and reasoning deeper. They treat cost and latency gaps as temporary, not terminal.

When you build for what’s coming:

- Today's constraints stay temporary. Ankur Goyal (Braintrust) encourages teams to write future-facing evals: tests meant to fail today and pass as models evolve. They act as automatic alerts that new capabilities, and new value, unlock.

- Early experiments compound as models improve.

- Roadmaps track with the slope of progress, not a snapshot of performance. (“The slope” refers to the rate and direction of improvement in model capability and economics. Designing for the slope means building systems that can take immediate advantage of each step forward in performance or cost.)

A rising tide raises all ships. The frontier model arms race is your roadmap's not-so-secret weapon. Builders should architect systems that expand with the models. They should scaffold products that can become more capable simply by swapping in stronger reasoning engines.

Conclusion

Sutton called it the bitter lesson: general methods that scale with compute always beat handcrafted cleverness. Cherny extended it for builders: design not for today’s snapshot, but for the slope of progress.

For researchers, that means trusting search and learning over rules. For builders, designing systems that get better as the models do. For users, prompting to explore before constraining. In all cases, the goal is the same: work with computation’s gradient, not against it. Precision ensures control; exploration creates discovery.

If the model wanted to, and you let it, it would. And soon, it will.